I’ll barge straight in.

I’ve spent a considerable part of the last 15 years trying to understand the impact of my campaigns. Like most people starting out in digital, I began with a limited understanding of analytics and attribution.

Fifteen years ago, there was a lot less talk about measurement, and there were far fewer tools promising that everything could be measured. We certainly didn’t talk about attribution back then!

It was quite simple. I’d run a campaign on Google Ads, or later on Facebook, and then try to figure out if it worked. Conversion tracking? Sometimes.

But mostly, it was trial and error. I’d switch campaigns on and off to see whether my sales or leads went up or down. I remember one case where a product I was selling in a webshop suddenly saw a spike in sales.

Google Analytics told me the sales came from Google Ads, through a campaign containing a bunch of generic keywords. Google Ads reported the same. So I expanded the keywords and started advertising quite broadly. Conversion rates dropped and I lost money.

Later, talking to my customers, I found out that a competing product had been featured on national TV for about a minute. That feature made people search for the product to find out more.

My paid search campaigns captured some of this traffic. Google Analytics, of course, did not tell me that the increased search volume came from the TV feature. It just showed sales were coming from paid search.

“It opened my eyes to how easy it was to make the wrong decisions based on some vague numbers shown on a buggy interface.”

In the last decade, we have often heard that one of the main advantages of digital media is that it is much easier to measure than offline.

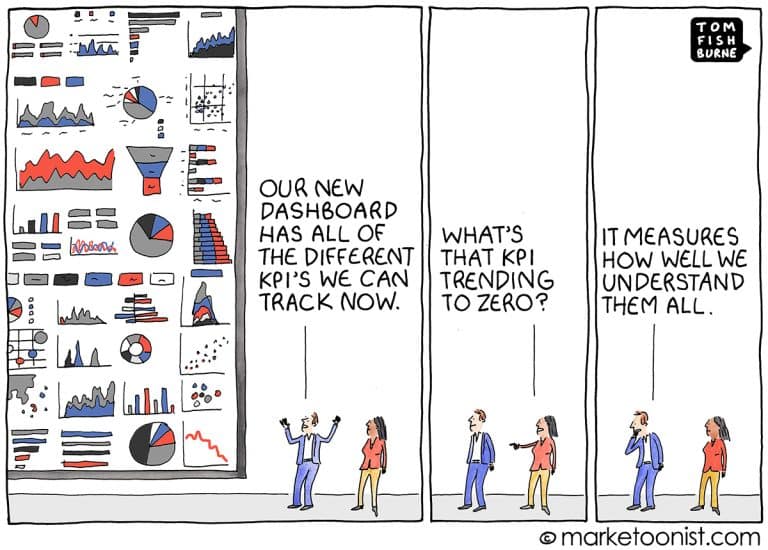

It is a narrative that has been shoved down our throats for years. As a result, people spend hours digging through analytics, Google Ads and Facebook reports, trying to draw conclusions for optimization.

“If a certain targeting shows sales, you expand it and bid more aggressively. If there are no sales, you cut it away.”

Recently, however, more and more critical voices have spoken out against the measurement methods of the last few years, as cookies become steadily less reliable.

Brands have also started putting two and two together, based on several years of campaign data, spotting patterns that in-campaign reporting couldn’t reveal.

The list below is not exhaustive, but it covers a few tools and methods you should have in your measurement arsenal:

• Cookie-based conversion tracking and attribution

• Micro Conversions

• Incrementality testing

• Media Mix Modelling

It is important to understand that none of these methods, on its own, accurately represents reality. Each gives insights that you need to put together to avoid spending money on the wrong things.

1/ Cookie-based conversion tracking and attribution

This is the most common way to measure digital media campaigns today, and it is what Google Analytics, Google Ads and most other platforms do.

A cookie is dropped on the device after a view or a click, and when a sale happens, the tracking tag reads that cookie and reports a conversion to the Google Analytics and Google Ads servers.

Attribution modelling then lets you compare different models, for example to assess the impact of clicks at the beginning of the customer journey against clicks at the end.
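To make that comparison concrete, here is a minimal sketch of how a few common rule-based models split the credit for one conversion across a journey. The channel names and the 40/20/40 position-based split are illustrative assumptions, not the exact rules of any particular platform.

```python
from collections import defaultdict


def attribute(journey, model):
    """Split one conversion's credit across the touchpoints in `journey`.

    journey: ordered list of channel names, first touch first.
    model: "first_click", "last_click", "linear" or "position_based".
    Returns a dict mapping channel -> share of the conversion (sums to 1.0).
    """
    if not journey:
        return {}
    n = len(journey)
    if model == "first_click":
        weights = [1.0] + [0.0] * (n - 1)
    elif model == "last_click":
        weights = [0.0] * (n - 1) + [1.0]
    elif model == "linear":
        weights = [1.0 / n] * n
    elif model == "position_based":
        # Assumed split: 40% to the first touch, 40% to the last, 20% shared by the middle.
        if n == 1:
            weights = [1.0]
        elif n == 2:
            weights = [0.5, 0.5]
        else:
            middle = 0.2 / (n - 2)
            weights = [0.4] + [middle] * (n - 2) + [0.4]
    else:
        raise ValueError(f"unknown model: {model}")

    # A channel that appears several times in the journey accumulates its shares.
    credit = defaultdict(float)
    for channel, weight in zip(journey, weights):
        credit[channel] += weight
    return dict(credit)


journey = ["paid_search_generic", "facebook", "paid_search_brand"]
for model in ("first_click", "last_click", "linear", "position_based"):
    print(model, attribute(journey, model))
```

The same journey produces very different pictures: first-click favours the generic search ad, last-click favours the branded one. Data-driven models replace these fixed weights with learned ones, but they still only see the journeys the cookies managed to record.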

This approach is widespread because it is readily available in Google Analytics, Google Ads, Adobe Analytics and so on.

The recommended model for most businesses is a data-driven attribution (DDA) model, where the weight given to a touchpoint basically depends on an algorithm that analyses the role of that touchpoint in all previous customer journeys.

And that is where the cookie crumbles (excuse the pun). The DDA model uses all previous customer journeys, each a series of clicks and impressions, to calculate the importance of certain touchpoints (hence data-driven).

If a large share of these journeys is incomplete because the cookie did not survive, the model is learning from broken journeys and its output can no longer be trusted. This has worsened over the last two years due to developments such as Intelligent Tracking Prevention (ITP) on Safari.

Initiatives such as ITP, which cap the lifetime of first-party cookies at 7 days or, in some cases, just 24 hours, are making accurate cookie-based tracking nearly impossible.

“Concretely, where cookie lifetimes are now shorter than the time needed between click and conversion, we risk heavily underinvesting.”
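A rough way to size this risk is to take the click-to-conversion lags of your customers and count how many exceed the cookie lifetime. The sketch below assumes you have those lags in days from a source that does not depend on the cookie (for example logged-in customers in a CRM), and that the cookie is never refreshed by a visit in between; the lag values are made up for illustration.

```python
def share_lost(lags_in_days, cookie_lifetime_days):
    """Fraction of conversions whose click-to-conversion lag exceeds the cookie lifetime.

    These are the conversions a cookie-based tracker would fail to link back to
    the original click, assuming the cookie is not refreshed before the sale.
    """
    if not lags_in_days:
        return 0.0
    lost = sum(1 for lag in lags_in_days if lag > cookie_lifetime_days)
    return lost / len(lags_in_days)


# Illustrative lags only, not real data.
lags = [0, 0, 1, 2, 3, 5, 8, 12, 20, 35]
for lifetime in (30, 7, 1):
    print(f"cookie lifetime {lifetime:>2} days -> "
          f"{share_lost(lags, lifetime):.0%} of conversions unlinked")
```

The shorter the lifetime relative to your typical lag, the larger the share of sales that disappears from the campaign that started the journey.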

Let us take the following example:

The journey: a Safari user on a desktop, searching for a new gaming laptop.

- On day 0, the user searches for “best gaming laptops”, clicks on a Mediamarkt ad and discovers the Mediamarkt laptop range. They browse a few pages, note the models and prices, and leave the site to take care of other things. They do not log in and have never purchased from the webshop before.

- On day 3, the user remembers the laptops on the Mediamarkt website, so they search for “Mediamarkt gaming laptop”. They click on the organic search result, find the laptop and order it.

Pre-ITP:

The generic search ad on “best gaming laptops” would have received full credit for the sale in Google Ads conversion tracking (first-click attribution). This would have shown us that it was worth bidding aggressively on this query in paid search, as it brought in the visitor who went on to buy.

Post-ITP:

The cookie dropped on the first visit would have expired before the second. In both Google Ads and Google Analytics, the user would be treated as a new visitor on their return.

In Google Ads, the first query “best gaming laptops” would not record a conversion, as the cookie that could link it to the sale would have expired. In Google Analytics, the sale is credited to organic search, with no link to the earlier paid search visit. No attribution model can fix this.
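The scenario can be replayed in a few lines. This is a simplified sketch, not how Google Ads or Google Analytics work internally: it assumes one cookie set on the first visit, not refreshed by later visits, and a day-based timeline. A 1-day lifetime stands in for the 24-hour ITP case; the `Visit` type and `credit` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Visit:
    day: int          # days since the first visit
    channel: str      # "paid_search" or "organic_search"
    query: str
    converted: bool = False


def credit(visits: List[Visit], cookie_lifetime_days: int) -> Dict[str, Optional[str]]:
    """Who gets credit for the sale in a simplified cookie-based tracker.

    If the cookie set on the first visit has expired by the time of the sale,
    the converting visit looks like a brand new visitor and the earlier visits
    are invisible to the tracker.
    """
    sale_index = next((i for i, v in enumerate(visits) if v.converted), None)
    if sale_index is None:
        return {"google_ads_query": None, "analytics_first_click": None}
    sale = visits[sale_index]
    cookie_alive = sale.day - visits[0].day <= cookie_lifetime_days
    # The journey as the tracker sees it.
    seen = visits[: sale_index + 1] if cookie_alive else [sale]
    paid = [v for v in seen if v.channel == "paid_search"]
    return {
        # Ads-style view: the last paid click the tracker can still link to the sale.
        "google_ads_query": paid[-1].query if paid else None,
        # Analytics-style first-click view: the first visit the tracker knows about.
        "analytics_first_click": seen[0].channel,
    }


journey = [
    Visit(day=0, channel="paid_search", query="best gaming laptops"),
    Visit(day=3, channel="organic_search", query="Mediamarkt gaming laptop", converted=True),
]
print("long-lived cookie:", credit(journey, cookie_lifetime_days=30))
print("24-hour cookie:   ", credit(journey, cookie_lifetime_days=1))
```

With the long-lived cookie, the generic ad keeps its credit; with the 24-hour cookie, the same sale shows up as a pure organic conversion.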

As the example shows, cookie-based attribution can be deeply flawed. Strong brands, and products or services with long customer journeys, suffer most from cookie limitations. Still, the method is convenient: it gives insights in-flight and can be used to optimize on the go.

When using attribution modelling, watch out for these red flags:

• Have you been over-optimizing on bottom-of-the-funnel touchpoints over the last few years? Attribution will tell you these touchpoints work, because you have no customer journeys that prove otherwise.

• Do you have a long customer journey? In B2B, for example, months can pass between a first visit and a sale. There is little chance your cookies will survive that long.

• Do you have a strong brand with many offline campaigns? There is a high chance your branded search traffic is your main sales “channel”.

However, this is the result of years of staying top of mind through ATL (above-the-line) campaigns. Cookies and attribution don’t know this: they cannot tell the difference between a touchpoint contributing to a sale (like most generic search keywords) and a touchpoint simply used to navigate to a website (like most branded search keywords).

As a result, attribution models overvalue these branded touchpoints.

However, as cookie-based measurement and attribution modelling provide insights during the campaign, they are tools we must keep. Just be aware of their limitations and factor them into your bidding and budget decisions.

2/ Micro conversions

A micro-conversion is a measurable action on the path to the final conversion.

The advantage of micro-conversions is that they happen soon after the first contact with the website, so they suffer far less from cookies expiring.

A loan simulation is a good example: it typically takes only a few seconds.

When we measure upper-funnel campaigns on Facebook or display, this could be a good indicator that we are reaching the right audiences.

It isn’t reasonable to expect someone you’ve reached for the first time through a Facebook ad to buy straight away, so a quick simulation or another sign of interest can be a much better metric.

These upper-funnel campaigns will seldom show many sales in cookie-based measurement, for the reason mentioned above: the cookie expires before the sale happens, so the sale is attributed to another touchpoint. That makes optimization tough, as without other indicators you are basically flying blind.

Micro-conversions fix this to an extent.

They provide enough data to optimize on, and to report on internally when the value of these campaigns is questioned.

Of course, micro conversions are not perfect. How do we know that by optimizing on a specific micro-conversion, we will also have more sales in the end?

This is the tough part, where most people go wrong and where correlation versus causation comes into play.

• A high number of impressions can correlate with sales without causing them.

• A high number of simulations can be a good indicator, but not when they come from bots, or from people who turn out not to be in the target group once they see the simulated rates.
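A first, limited sanity check is whether weekly micro-conversion counts move with weekly sales, possibly with a delay matching the customer journey. The sketch computes a Pearson correlation with an optional lag in weeks; the lag parameter, function names and numbers are illustrative assumptions. As the bullets above warn, a high value only shows the two series move together, not that one causes the other.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no correlation")
    return cov / (sx * sy)


def lagged_correlation(micro, sales, lag_weeks=0):
    """Correlate micro-conversions in week t with sales in week t + lag_weeks."""
    if lag_weeks:
        micro, sales = micro[:-lag_weeks], sales[lag_weeks:]
    return pearson(micro, sales)


# Illustrative weekly counts, not real data.
micro = [120, 150, 90, 200, 170, 130]
sales = [11, 12, 15, 9, 20, 17]
for lag in (0, 1):
    print(f"lag {lag} week(s): r = {lagged_correlation(micro, sales, lag):.2f}")
```

Even a perfect lagged correlation would not rule out bots or a shared cause such as seasonality; only an incrementality test can settle that.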

However, having a clear view of upper-funnel metrics can be a great fallback when attribution modelling alone undervalues certain channels.

3/ Incrementality testing

Incrementality testing is a horribly complicated term that basically means “switching campaigns on and off”. This is a massive oversimplification, but you get the point.

It is my personal favourite.

Not sure something works? Great. Switch it off, see what happens.

Usually, people are afraid to do this because they fear it will hurt their sales or leads. The key to doing it sensibly is to set up a test environment where the impact is limited.

This can be done through geo testing: you run a campaign in one area but not in a comparable one, and compare how sales in each area move against their own baseline.

For this to make sense, you need to run the test long enough to cover the length of the customer journey.

If you want to assess the impact of social ads on a product that has a three-week customer journey, don’t run your test for two weeks…
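One simple way to read a geo test is a difference-in-differences: the change in the control area estimates what would have happened in the test area without the campaign, and the lift is whatever the test area gained on top of that. This is a minimal sketch assuming two comparable areas and invented weekly sales; it also refuses to report a result when the test period is shorter than the customer journey. A real analysis would check that the areas tracked each other before the test and account for noise.

```python
from statistics import mean


def geo_lift(test_pre, test_during, control_pre, control_during, journey_periods):
    """Difference-in-differences estimate of incremental sales per period.

    Each sales argument is a list of sales per period (e.g. weekly).
    journey_periods: typical customer journey length, in the same periods.
    """
    if len(test_during) < journey_periods or len(control_during) < journey_periods:
        raise ValueError("test period is shorter than the customer journey; extend the test")
    test_change = mean(test_during) - mean(test_pre)
    control_change = mean(control_during) - mean(control_pre)
    return test_change - control_change


# Invented weekly sales: campaign runs in the test area only during the test weeks.
lift = geo_lift(
    test_pre=[100, 104, 98, 102],
    test_during=[120, 118, 125, 121],
    control_pre=[90, 92, 88, 90],
    control_during=[95, 93, 96, 96],
    journey_periods=3,
)
print(f"Estimated incremental sales per week: {lift}")
```

Here the test area grew by 20 sales a week, but the control area grew by 5 without any campaign, so only 15 a week are attributed to the campaign.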

One of my favourite debates is whether paid search on branded keywords makes any sense. Some swear by it, saying the cost is minimal; others switch it off straight away, saying it wastes money.

I tend to favour the latter, but it is always a case-by-case story.

The easiest way to find out? Switch off the branded search campaigns. See what happens to your organic sales.
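Reading the result is simple arithmetic: compare total branded conversions (paid plus organic) with the campaign on and off, and divide the spend by the conversions the campaign actually added. All numbers below are hypothetical, the function name is illustrative, and the two periods are assumed comparable in length and seasonality.

```python
def brand_search_incrementality(on_paid, on_organic, off_organic, brand_spend):
    """Estimate what branded search adds, from an on period and a comparable off period.

    on_paid / on_organic: branded conversions per channel while the campaign ran.
    off_organic: branded (organic) conversions while the campaign was paused.
    brand_spend: spend on branded search during the on period.
    """
    incremental = (on_paid + on_organic) - off_organic
    # Share of the paid conversions that organic search picked up once ads were off.
    recaptured = (off_organic - on_organic) / on_paid if on_paid else 0.0
    return {
        "incremental_conversions": incremental,
        "share_recaptured_by_organic": recaptured,
        "platform_cpa": brand_spend / on_paid if on_paid else float("inf"),
        "cost_per_incremental_conversion": (
            brand_spend / incremental if incremental > 0 else float("inf")
        ),
    }


print(brand_search_incrementality(on_paid=300, on_organic=700, off_organic=960, brand_spend=6000.0))
```

In this made-up case the platform reports a cost of 20 per conversion, but most of those sales would have come through organic anyway, so each truly incremental conversion costs 150.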

4/ Media Mix Modelling

Media mix modelling (MMM) has been used for decades to assess the impact of offline media on sales.

In a nutshell, it uses multiple linear regression to assess whether certain channels or media have an impact on sales.
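At its core, the regression can look like the sketch below: weekly sales regressed on weekly spend per channel, with a geometric adstock transform so that spend in one week can still affect sales in later weeks. The adstock step, the fixed decay of 0.5 and the synthetic data (generated with known effects so you can see the fit recover them) are assumptions for illustration. Real MMMs also estimate the decay, and add seasonality, saturation curves, pricing and other controls.

```python
import numpy as np


def adstock(spend, decay):
    """Geometric adstock: each week carries over a share `decay` of last week's effect."""
    out = np.zeros(len(spend))
    carry = 0.0
    for t, x in enumerate(spend):
        carry = x + decay * carry
        out[t] = carry
    return out


def fit_mmm(sales, spend_by_channel, decay=0.5):
    """Ordinary least squares of sales on adstocked spend; the intercept is the baseline."""
    names = list(spend_by_channel)
    X = np.column_stack(
        [np.ones(len(sales))] + [adstock(spend_by_channel[n], decay) for n in names]
    )
    coefs, *_ = np.linalg.lstsq(X, sales, rcond=None)
    return {"baseline": coefs[0], **dict(zip(names, coefs[1:]))}


# Synthetic data: 104 weeks (two years) with known true effects, not real campaign data.
rng = np.random.default_rng(0)
weeks = 104
tv = rng.uniform(0, 100, weeks)
search = rng.uniform(0, 50, weeks)
sales = 1000 + 2.0 * adstock(tv, 0.5) + 3.0 * adstock(search, 0.5) + rng.normal(0, 5, weeks)

fitted = fit_mmm(sales, {"tv": tv, "search": search})
print({name: round(float(value), 2) for name, value in fitted.items()})
```

Because the model only sees spend and sales, cookies play no part. The flip side is that it will happily credit a channel like branded search that merely moves with demand, which is why the analyst needs to understand the channels.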

For example, this method can reveal that TV ads have an impact on sales, even when the final touchpoint before the sale was a paid search ad.

It is immensely valuable because it sidesteps cookie limitations entirely. However, it would not be a measurement technique if it didn’t come with its own complications.

Media mix modelling takes time. You need two years of solid, clean data, and the person doing the analysis needs a basic understanding of digital marketing.

I remember a case a few years back where the analysts modelling data for one of our clients did not understand that branded search was not “driving” sales.

Their conclusion was “do more branded search”. So, to make MMM work, you can’t just hand over the data and hope for the best.

A second disadvantage is that media mix modelling cannot be done on the fly: it is done periodically, once campaigns are over.

Conclusion

None of these methods gives you the full story, but combined, they can give you insights you would not get from any single one of them.

• Cookie-based attribution on final sales works well during campaigns, alongside micro-conversion measurement.

• Media mix modelling gives you a macro-level view and can help confirm suspicions that certain channels are being under- or overvalued.

• Incrementality testing often provides the confirmation you need to close the debate.

If you need help with any of these items, feel free to reach out to us.
